Weekly Digest #159, 25 Jan 2026.

As tokenization becomes critical financial market infrastructure, it can signal a similar shift for decentralised content provenance. Platforms and publishers would move from using it as an optional add-on to making it the default plumbing they quietly rely on.

Tokenization as a bedrock for content provenance and renewed trust in news

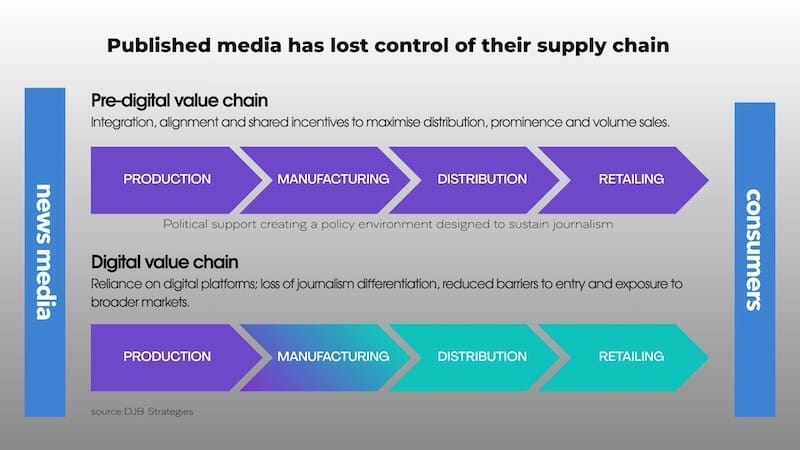

This week, I had an interesting conversation about how the media and creative industries could protect their content in the future, guarantee its provenance, and, above all, regain control over a value and supply chain that is increasingly slipping from their grasp into the hands of tech intermediaries.

The industry is turning to regulation and political support in the hope that they will solve its problem. It is a necessary but uncertain prospect. Europe's island of regulatory protection is under attack from the US administration's repressive measures, and is also being criticised by Europeans themselves. Global alignment and multilateralism are being sacrificed on the altar of national sovereignty, and it remains to be seen whether strategic alliances between middle powers can restore balance.

Given these regulatory uncertainties, our conversation shifted to how sector best practices, rooted in shared industry protocols and standards, could drive change instead. Again, we drew a discouraging conclusion: organisations experiment in isolation, adopt standards narrowly, remain dispersed as players, fail to reach consensus, and generally misunderstand how industrial standards support business prosperity.

The invisible tokenization revolution is the missing incentive for mainstream adoption of content-provenance protocols.

Against this backdrop, the World Economic Forum at Davos took place amid debates over deep geopolitical tensions, cracks in American global leadership (with a bold statement by Canadian Prime Minister Mark Carney), and mounting pressure to rebuild growth and prosperity. Even so, some discussions shed light on a path forward to down-weight misinformation and restore trust in the news media, grounded in content provenance. This path is rooted in the ongoing mainstream adoption of tokenization and stablecoins in finance.

At Davos 2026, many discussions focused on how tokenization has moved from a crypto experiment to a key part of global finance. Institutions, regulators, and governments now see it as essential market infrastructure.

Looking ahead, as tokenization becomes critical financial market infrastructure, it can signal a similar shift for decentralised content provenance. Platforms and publishers would move from using it as an optional add-on to making it the default plumbing they quietly rely on. If tokenization becomes commonplace in our monetary and financial systems, it will inevitably spread to all layers of the economy. This is a critical incentive for adopting content-provenance standards and for fighting misinformation, both of which rely on the same accountable, transparent protocols enabled by shared ledger technologies.

The question is no longer whether tokenization belongs in mainstream finance, but how fast, safely, and inclusively we should implement it and let it diffuse into all value-creation systems in the economy, from finance to advertising and ultimately to media and content creation.

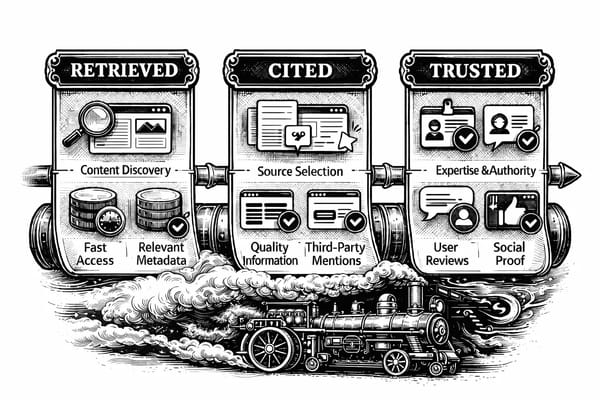

What tokenization adds to content provenance

- Cryptographic audit trail: Tokenized records or on-chain hashes create an immutable log of content creation and edits, similar to lifecycle histories for tokenized bonds and funds.

- Global identifiers and markets: Token-like content identifiers can integrate with decentralized identity and rights systems, enabling verifiable ownership, licensing, and fractional revenue-sharing of media assets.

- Interoperability: Open standards like C2PA, along with Verifiable Credentials and Decentralised Identifiers such as the Global Media Identifier (GMI), provide a shared way for different platforms to verify provenance, much as financial token standards enable assets to move across chains and custodians.
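To make the "cryptographic audit trail" idea concrete, here is a minimal sketch of a hash-chained edit log in Python. It is an illustration only, not the C2PA manifest format or any real ledger API: the function names, the record fields, and the `did:example:...` identifiers are all assumptions made for this example, and anchoring the final hash on a public or consortium chain is left out.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record: dict) -> str:
    """SHA-256 hex digest of a record, serialised deterministically."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: list, action: str, content: bytes, actor: str) -> dict:
    """Append an event that commits to the content and to the previous entry."""
    body = {
        "action": action,
        "actor": actor,  # hypothetical identifier, e.g. a DID
        "content_hash": hashlib.sha256(content).hexdigest(),
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS,
    }
    entry = dict(body, entry_hash=record_hash(body))
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Recompute every hash and check that each entry links to the one before."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev_hash:
            return False
        if record_hash(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


log = []
append_event(log, "created", b"Draft of the article", "did:example:newsroom")
append_event(log, "edited", b"Final version of the article", "did:example:editor")
print(verify_log(log))  # True: the chain is intact

log[0]["actor"] = "did:example:impostor"  # tamper with history
print(verify_log(log))  # False: the first entry no longer matches its hash
```

Because each entry commits to the hash of the previous one, rewriting any past event invalidates the chain. Publishing only the latest hash to a shared ledger would then be enough to make the whole history tamper-evident.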

Incentivised by financial and monetary markets, which are the bedrock of our economies, large platforms, standards bodies, and regulators will align around protocols such as C2PA and blockchain anchoring. Most professional media would then ship with cryptographically signed provenance manifests by default, just as most securities will gradually gain tokenized representations by default.

If provenance tokens or signed manifests become as standard as HTTPS, platforms and browsers could default to showing provenance “signals” and down‑weighting or flagging unverifiable media. That would not stop all misinformation, but it would make it easier to trust content with strong provenance (e.g., newsrooms' election coverage) and to question orphaned, unsigned media.
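As a rough illustration of what "down-weighting" could mean in practice, the sketch below multiplies a relevance score by a provenance-dependent factor before ranking. Everything here is hypothetical: the three provenance states, the multiplier values, and the `MediaItem` fields are assumptions for the example, not how any platform or browser actually ranks content.

```python
from dataclasses import dataclass
from enum import Enum


class Provenance(Enum):
    VERIFIED = "verified"  # manifest present and its signature checks out
    UNSIGNED = "unsigned"  # no manifest at all ("orphaned" media)
    INVALID = "invalid"    # manifest present but verification failed


# Hypothetical multipliers; a real system would tune or learn these.
WEIGHTS = {
    Provenance.VERIFIED: 1.0,
    Provenance.UNSIGNED: 0.6,
    Provenance.INVALID: 0.2,
}


@dataclass
class MediaItem:
    title: str
    relevance: float  # score from an upstream ranking model (assumed)
    provenance: Provenance


def rank(items: list) -> list:
    """Sort items by relevance scaled by their provenance weight, best first."""
    return sorted(
        items,
        key=lambda item: item.relevance * WEIGHTS[item.provenance],
        reverse=True,
    )


items = [
    MediaItem("Viral clip, no manifest", 0.90, Provenance.UNSIGNED),
    MediaItem("Newsroom election report", 0.80, Provenance.VERIFIED),
    MediaItem("Image with broken signature", 0.95, Provenance.INVALID),
]

for item in rank(items):
    print(item.title)
```

With these assumed weights, the signed newsroom report ranks first even though its raw relevance is lowest. Unsigned media is demoted rather than removed, which matches the point that provenance signals guide trust without blocking content outright.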

For content, the main questions will echo those in finance: who operates the key registries and chains, how decentralised they really are, and how to balance privacy with traceability. A likely outcome is a layered architecture: open standards (C2PA, Decentralised Identifiers or DIDs), multiple public or consortium chains for anchoring, and user-facing tools that expose provenance simply while keeping the underlying tokenization infrastructure largely invisible.

More to read or listen to in this edition:

👉 From Content Factories to Data Architects.

The shift from being a "content factory" to a "structured knowledge network" is the defining challenge of our time. News organisations are no longer merely competing with each other; they are competing with proactive, agentic systems that possess deeper context regarding the user’s life than the newsroom itself.

As AI commoditises the "tail end" of journalism—the packaging, distribution, and newsletter curation—the economic value of the content unit is trending toward zero. To survive, news organisations must move their value "upstream." This is the future of the industry: newsrooms acting as a "structured knowledge network" that unearths signals hidden within data that machines and humans can both utilise.

Journalism has traditionally sold two things: a solution to information scarcity and an editorial filter. In the AI era, this requires a transition into becoming "data gathering companies" that produce curated, structured, and contextualised data. The choice for media professionals is stark: remain a witness to the aftermath of events that have already occurred, or become the architects of the frameworks that help society interpret what may happen next. 2025 was the reckoning; 2026 will be the era of deployment. Are you building for history, or for anticipation?

👉 World Press Trends Outlook: Rising ‘three-pillar’ revenue model fuels industry optimism.

The continued focus and momentum on diversifying revenue streams over the last few years are helping publishers build a more balanced, sustainable business model, says WAN-IFRA in its latest World Press Trends Outlook report, grounded in findings from a comprehensive survey of senior media executives and newsroom leaders.

This year's report depicts a global news publishing industry caught, in 2025-2026, in a paradox of structural decline and strategic optimism. Despite the persistent erosion of traditional print revenues and the disruptive influence of Generative AI, news media leaders report a three-year high in business confidence. Some 62.9% of executives are optimistic about the next 12 months, a figure that rises to 65.2% when looking toward a three-year horizon.

👉 Paid content revenue in Germany: continued significant growth to currently almost €1.7 billion per year.

Despite the odds, sales of paid content in Germany again grew at a double-digit rate last year (+15%), and not even mainly because of increased prices.

Roughly two-thirds of paid content revenue is generated by daily newspapers. For regional daily newspapers, e-paper accounts for the majority of revenue, with only 19% from premium subscriptions or other paywall products.

In contrast, digitalisation has progressed significantly further in the readership market for national newspapers. For the first time, national newspapers are generating more than half of their digital readership revenue through paywalls.

👉 The next major AI battleground is the classroom.

As Google, Microsoft and Anthropic race to make their tools the chatbots of choice for teachers and students, Brookings published a new study on kids, AI, and education. The verdict: "It's not good!" The study shows that students and teachers may be losing trust in each other and that cognitive offloading is very real.

The authors identify a dual landscape of significant benefits, such as personalised tutoring and teacher productivity gains, alongside serious risks, such as cognitive dependency and social isolation. They confirm that AI can save teachers an average of 5.9 hours weekly on administrative tasks, but these gains only benefit students if the saved time is reinvested in relationship-building.

The authors call for a shift from passive acceptance to deliberate action via three pillars: Prosper (pedagogical integration), Prepare (literacy and planning), and Protect (safeguards and governance).